– Stochastic Course Notes

Monthly Archives: December 2014

Markov Chain — Branching Process

– Stochastic Course Notes

1. Definition

Consider a population

- Each individual produces j new offspring each period with probability

. Key assumption: This distribution is constant over time.

. Key assumption: This distribution is constant over time. - Assume that

for all j (i.e., the problem is not deterministic)

for all j (i.e., the problem is not deterministic) - Let

be the size of the population at period n

be the size of the population at period n

2. Number of Population in the n-th Generation

3. Probability of Die Out

4. Example: Family Genetics

Problem:

Markov Chain — Tendem Queue

Markov Chain – Classifications of States

1. Definition of States

- Communicate: State i and j communicate (

) if i is reachable from j and j is reachable from i. (Note: a state i always communicates with iteself)

) if i is reachable from j and j is reachable from i. (Note: a state i always communicates with iteself) - Irreducible: a Markov chains is irreducible if all states are in the same communication class.

- Absorbing state:

- Closed Set: a set of states S is a closed set if no state outside of S is reachable from any state in S. (This is like absorbing set of states)

: be the probability that, starting in state i, the process returns (at some point) to the sate i

: be the probability that, starting in state i, the process returns (at some point) to the sate i- Transient: a state is transient if

- Recurrent: a state that is not transient is recurrent, i.e.,

. There are two types of reurrent states

. There are two types of reurrent states - Positive recurrent: if the expected time to return to the state if finite

- Null recurrent: if the expected time to return to the sate i is infinite (this requires an infinite number of states)

- Periodic: a state is i period if

where k is the smallest number such that all paths leading from state i back to state i has a multiple of k transitions

where k is the smallest number such that all paths leading from state i back to state i has a multiple of k transitions - Aperiodic: if it has period k =1

- Ergodic: a state is ergodic if it is positive recurrent and aperiodic.

2. Example: Gambler’s Ruin

Markov Chain (Continuous Time)

Markov Chain (Discrete Time)

1. Definition

Let ![]() be a stochastic process, taking on a finite or countable number of values.

be a stochastic process, taking on a finite or countable number of values.

![]() is a DTMC if it has the Markov property: Given the present, the future is independent of the past

is a DTMC if it has the Markov property: Given the present, the future is independent of the past![]()

We define ![]() , since

, since ![]() has the stationary transition probabilities, this probability is not depend on n.

has the stationary transition probabilities, this probability is not depend on n.

Transition probabilities satisfy ![]()

2. n Step transition Probabilities

![]()

Proof:

3. Example: Coin Flips

4. Limiting Probabilities

Theorem: For an irreducible, ergodic Markov Chain, ![]() exists and is independent of the starting state i. Then

exists and is independent of the starting state i. Then ![]() is the unique solution of

is the unique solution of ![]() and

and ![]() .

.

Two interpretation for ![]()

- The probability of being in state i a long time into the future (large n)

- The long-run fraction of time in state i

- If Markov Chain is irreducible and ergodic, then interpretation 1 and 2 are equivalent

- Otherwise,

is still the solution to

is still the solution to  , but only interpretation 2 is valid.

, but only interpretation 2 is valid.

Splitting a Poisson Process: M/G/Infinity Queue

1. Notation

- M: “Markovian” or “Memoryless” arrival process (i.e., Poisson Process)

- G: General service time (not necessarily exponential)

: Infinite number of servers

: Infinite number of servers

be the number of customers who have completed service by time t

be the number of customers who have completed service by time t be the number of customers who are being served at time t

be the number of customers who are being served at time t be the total number of customers who have arrived by time t

be the total number of customers who have arrived by time t

2. Splitting the arrival process

- Fix a reference time T.

- Consider the process of customers arriving prior to time T.

- A customer arriving at time

is

is - Type I: if service is completed before T

- occur with probability

- Type-II: if customer still is service at time T

- occur with probability

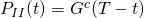

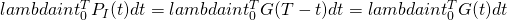

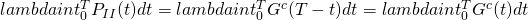

is a Poisson random variable with mean

is a Poisson random variable with mean  .

. is a Poisson random variable with mean

is a Poisson random variable with mean

and

and  are independent

are independent

for large t. Therefore,

for large t. Therefore,  is a Poisson random variable with mean

is a Poisson random variable with mean

is a Poisson random variable with mean

is a Poisson random variable with mean ![Rendered by QuickLaTeX.com lambda int^T_0 G^ct)dt = lambda E[G]](http://mytechroad.com/wp-content/ql-cache/quicklatex.com-2c5d36fa13d7d0d546ab1022413e9709_l3.png)

3. Example

Compound Poisson Process (CPP)

1. Definition:

Remove the restriction that two or more customers cannot arrive at the same time, (i.e., remove orderliness property)

2. Expectation:

3. Variance:

Non-Homogeneous Poisson Process (NPHH)

1. Properties:

- N(0) = 0

- N(t) has independent increments

- This is like a Poisson process, without the stationary assumption

2. Definition:

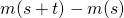

The mean value function (for a NHPP) is

3. Key Properties:

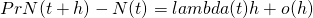

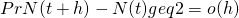

- For a NHPP, N(s+t) – N(s) is a Poisson random variable with mean

The Poisson Process

1. Priliminary Definitions

Def : A stochastic process is a collection of random variable (RV) indexed by time ![]() .

.

- If T is continuous set, the process is a continuous time stochastic process (e.g., Poisson Process)

- If T is countable, then the process is a discrete time stochastic process (e.g., Markov chain)

(that is, N(t) is non-negative integer)

(that is, N(t) is non-negative integer)- If

, the

, the  ( that is, N(t) is non-decreasing in t)

( that is, N(t) is non-decreasing in t) - For

,

,  is the number of events occurring in the time interval

is the number of events occurring in the time interval ![Rendered by QuickLaTeX.com (s,t]](http://mytechroad.com/wp-content/ql-cache/quicklatex.com-cc06eecde7462591e08662a960f6c9a0_l3.png) .

.

2. Definition of Poisson Process

Definition 1: A Poisson process is a counting process ![]() with rate

with rate ![]() , if:

, if:

- N(0) = 0;

- The Process has independent increments

- The number of events in any interval of length t is a Poisson RV with mean

.

.

Definition 2: A Poisson process is a counting process ![]() with rate

with rate ![]() if

if

- N(0) = 0

- The process has stationary increments

- The process has independent increments

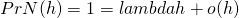

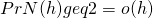

(# of events approximately proportional to the length of interval)

(# of events approximately proportional to the length of interval) (can’t have 2 or more events at the same time — “orderliness”)

(can’t have 2 or more events at the same time — “orderliness”)

- Eliminate assumption 2, Non-stationary Poisson Process

- Eliminate assumption 3, Mixture of Poisson Process (choose

randomly, then run a Poisson process)

randomly, then run a Poisson process) - Eliminate assumption 5, compound Poisson Process

3. Conditional Distribution of Event Times