1. Data Labels

- Supervised Anomaly Detection

- labels available for both normal data and anomalies

- similar to rare class mining

- Semi-supervised Anomaly Detection

- labels available only for normal data

- Unsupervised Anomaly Detection

- No labels assumed

- Based on the assumption that anomalies are very rare compared to normal data

2. Type of Anomalies

P

- Point Anomalies

- An individual data instance is anomalous w.r.t. the data

- Contextual Anomalies

- An individual data instance is anomalous within a context

- requires a notion of context

- also referred to as conditional anomalies

- Collective Anomalies

- A collection of related data instances is anomalous

- Requires a relationship among data instances

- sequential data

- spatial data

- graph data

- The individual instances within a collective anomaly are not anomalous by themselves

3. Evaluation of Anomaly Detection

- Accuracy is not sufficient metric for evaluation

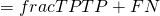

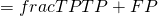

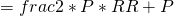

- Recall:

- Precision

- F-measure

- Recall (detection rate)

- ratio between the number of correctly detected anomalies and the total number of anomalies

- False alarm (false positive)

- ratio between the number of data records from normal class that are misclassified as anomalies and the total number of data records from normal class.

- ROC curve: a tradeoff between detection rate and false alarm rate.

- Area under the ROC curve (AUC) is computed using a tapeziod rule.

5. Classification Based Techniques

- Main idea: build a classification model for normal (and anomalous (rare)) events based on labeled training data, and use it to classify each new unseen event.

- Classification models must be able to handle skewed (imbalanced) class distribution

- Categories

- supervised learning techniques

- require knowledge of both normal and anomaly class

- build classifier to distinguish between normal and known anomalies

- semi-supervised classification techniques

- require knowledge of normal class only

- use modified classification model to learn the normal behavior and then detect any deviations from normal behavior as anomalous

- Advantage

- supervised learning techniques

- models that can be easily understood

- high accuracy in detecting many kinds of known anomalies

- semi-supervised classification techniques

- models that can be easily understood

- normal behavior can be accurately learned

- Drawbacks

- supervised learning techniques

- require both labels from both normal and anomaly class

- cannot detect unknown and emerging anomalies

- semi-supervised classification techniques

- require labels from normal class

- possible high false alarm rate

- previous unseen data records may be recognized as anomalies

6. Nearest Neighbor Based

- Key assumption

- normal points have close neighbors while anomalies are located far from other points

- Two approach

- compute neighborhood for each data record

- analyze the neighbor to determine whether data record is anomaly or not

- Categories

- distance-based methods

- anomalies are data points most distant from other points

- density-based methods

- anomalies are data points in low density regions

- Advantage

- can be used in unsupervised or semi-supervised setting (do not make any assumptions about data distribution)

- Drawbacks

- if normal points do not have sufficient number of neighbors the techniques may fail

- computationally expensive

- in high dimensional spaces, data is sparse and the concept of similarity may not be meaningful anymore

- due to the sparseness, distances between any two data records may become quite similar

- each data record may be considered as potential outlier

7. Clustering-based Techniques

- Key assumption

- normal data records belong to large and dense clusters, while anomalies do not belong to any of the clusters or form very small clusters

- Categorization according to labels

- semi-supervised

- cluster normal data to create models for normal behavior. If a new instance do not belong to any of the clusters or it is not close to any cluster, is anomaly.

- unsupervised

- post processing is needed after a clustering step to determine the size of the clusters and the distance from the cluster is required for the point to be anomaly

- Advantage

- No need to be supervised

- Easily adaptable to online/incremental mode suitable for anomaly detection from temporal data

- Drawbacks

- computationally expensive

- using indexing structures may alleviate this problem

- if normal points do not create any clusters the techniques may fail

- in high dimensional spaces, data is sparse and distances between any two data records may become quite similar

8. Statistics Based Techniques

- Data points are modeled using stochastic distribution

- points are determined to be outliers depending on their relationship with their model

- Advantage

- utilize existing statistical modeling techniques to model various type of distributions

- Drawback

- with high dimensions, difficult to estimate distributions

- parametric assumptions often do no hold for real data sets

- Parametric Techniques

- assume that the normal (and possibly anomalous) data is generated from underlying parametric distribution

- learn the parameters from the normal sample

- determine the likelihood of a test instance to be generated from this distribution to detect anomalies

- Non-parametric Techniques

- do not assume any knowledge of parameters

- use non-parametric techniques to learn a distribution

- e.g., parzen window estimation

9. Information Theory Based Techniques

- Computer information content in data using information theoretic measures

- e.g., entropy, relative entropy, etc.

- Key idea: outliers significantly alter the information content in a dataset

- Approach: detect data instances that significantly alter the information content

- require an information theoretic measure

- Advantage

- operate in an unsupervised mode

- Drawback

- require an information theoretic measure sensitive enough to detect irregularity induced by few outliers

- Example

- Kolmogorov complexity

- detect smallest data subset whose removal leads to maximum reduction in kolmogorov complexity

- Entropy-based approaches

- find a k-sized subset whose removal leads to the maximal decrease in entropy

10. Spectral Techniques

- Analysis based on eigen decomposition of data

- key idea

- find combination of attributes that capture bulk of variability

- reduced set of attributes can explain normal data well, but not necessarily the outliers

- Advantage

- can operate in an unsupervised mode

- Drawback

- based on the assumption that anomalies and normal instances are distinguishable in the reduced space

- Several methods use Principal Component Analysis

- top few principal components capture variability in normal data

- smallest principal component should have constant values

- outliers have variability in the smallest component

11. Collective Anomaly Detection

- Detect collective anomalies

- Exploit the relationship among data instances

- Sequential anomaly detection

- detect anomalous sequence

- Spatial anomaly detection

- detect anomalous sub-regions within a spatial data set

- Graph anomaly detection

- detect anomalous sub-graphs in graph data