Problem

Suppose there are n candidates and we do not want to interview all of them to find the best one. We also do not wish to hire and fire as we find better and better candidates.

Instead, we are willing to settle for a candidate who is close to the best, in exchange for hiring exactly once.

After each interview, we must either immediately offer the position to the applicant or immediately reject the applicant.

What is the trade-off between minimizing the amount of interviewing and maximizing the quality of the candidate hired?

Code
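
A minimal sketch of the strategy analyzed below, assuming each candidate is summarized by a numeric score and that higher is better. The function name `online_maximum` and the `scores` list are illustrative choices, not part of the problem statement. We interview and reject the first k candidates to set a benchmark, then hire the first later candidate who beats it. Indices are 0-based.

```python
from typing import Sequence


def online_maximum(scores: Sequence[float], k: int) -> int:
    """Return the 0-based index of the candidate hired by the reject-first-k rule."""
    n = len(scores)
    if not 0 <= k < n:
        raise ValueError("k must satisfy 0 <= k < n")

    # Phase 1: interview the first k candidates, reject them all,
    # and remember the best score seen (assumed: higher is better).
    best_seen = max(scores[:k], default=float("-inf"))

    # Phase 2: hire the first later candidate who beats that benchmark.
    for i in range(k, n):
        if scores[i] > best_seen:
            return i

    # Nobody beat the benchmark, so we are left with the last candidate.
    return n - 1
```

For example, `online_maximum([2, 7, 4, 9, 5, 8], k=2)` rejects the first two candidates, skips the score 4, and hires the candidate with score 9. If the best candidate happens to be among the first k, the rule falls through to the last candidate.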

Analysis

In the following, we determine the value of k, the number of initial candidates we interview and reject in order to set a benchmark, that maximizes the probability of hiring the best candidate. In the analysis, we assume indices start at 1 (rather than 0 as shown in the code).

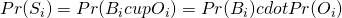

Let $B_i$ be the event that the best candidate is the $i$-th candidate.

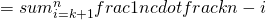

Let $O_i$ be the event that none of the candidates in positions $k+1$ to $i-1$ is chosen.

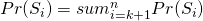

Let $S_i$ be the event that we hire the best candidate when the best candidate is in the $i$-th position.

We have $\Pr\{S_i\} = \Pr\{B_i \cap O_i\} = \Pr\{B_i\} \Pr\{O_i\}$ since $B_i$ and $O_i$ are independent events.
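
To make the product concrete, here is a sketch of how the two factors are usually evaluated. It assumes the candidates arrive in uniformly random order and that $k$ is the number of candidates rejected up front. Both are assumptions stated here for the derivation, following the standard textbook argument.

```latex
\begin{align*}
\Pr\{B_i\} &= \frac{1}{n}, \\
\Pr\{O_i\} &= \frac{k}{i-1}, \\
\Pr\{S_i\} &= \Pr\{B_i\}\,\Pr\{O_i\} = \frac{k}{n(i-1)},
  \qquad i = k+1, \dots, n.
\end{align*}
```

The second line holds because no candidate in positions $k+1$ to $i-1$ is chosen exactly when the best of the first $i-1$ candidates is among the first $k$, and that best candidate is equally likely to be in any of the $i-1$ positions.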

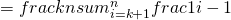

Setting this derivative equal to 0, we see that the lower bound on the probability is maximized when $k = n/e$; with this choice of $k$, we hire the best candidate with probability at least $1/e$.
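
As a numerical sanity check, the sketch below evaluates the success probability exactly for a finite n and searches over every cutoff k by brute force. It assumes the closed form Pr{S} = (k/n) · Σ_{i=k}^{n-1} 1/i, which follows from the standard analysis when candidates arrive in uniformly random order. The function names `success_probability` and `best_cutoff` are hypothetical helpers introduced for illustration.

```python
import math


def success_probability(n: int, k: int) -> float:
    """Probability of hiring the best of n candidates after rejecting the first k.

    Assumes the closed form Pr{S} = (k / n) * sum_{i=k}^{n-1} 1/i.
    """
    if not 1 <= k < n:
        raise ValueError("k must satisfy 1 <= k < n")
    return (k / n) * sum(1.0 / i for i in range(k, n))


def best_cutoff(n: int) -> int:
    """Brute-force the k in [1, n-1] that maximizes success_probability(n, k)."""
    return max(range(1, n), key=lambda k: success_probability(n, k))


n = 1000
k_star = best_cutoff(n)
# Compare the brute-force optimum with n/e, and its probability with 1/e.
print(k_star, n / math.e)
print(success_probability(n, k_star), 1 / math.e)
```

The brute-force cutoff should land next to n/e, and the corresponding probability should sit just above 1/e, matching the bound derived above.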

Reference

[1] Introduction to Algorithms, CLRS